Welcome

Overview

Teaching: 60 min

Exercises: 0 minQuestions

Who is sitting next to me?

What are we going to do in this workshop?

Objectives

Overall view of the workshop

Getting to know each other

Key Points

Listen to Assembly lecture

Overview

Teaching: 45 min

Exercises: 0 minQuestions

Objectives

Key Points

The dataset

Overview

Teaching: 15 min

Exercises: 5 minQuestions

What is metagenomics?

What do we call viral dark matter?

Where does the dataset come from?

What format is the sequencing data?

Objectives

Understanding what is a metagenomic study.

Understanding how the samples in the dataset are related.

Collecting basic statistics of the dataset.

Before anything else, download the file containing the conda environment file, create the environment in your machine, and activate it.

# download the file describing the conda environment

$ wget https://raw.githubusercontent.com/MGXlab/Viromics-Workshop-MGX/gh-pages/code/day1/day1_env_file.txt

# create the environment, call it day1_env

$ conda create --name day1_env --file day1_env_file.txt

# activate the environment

$ conda activate day1_env

Metagenomics

The emergence of Next Generation Sequencing (NGS) has facilitated the development of metagenomics. In metagenomic studies, DNA from all the organisms in a mixed sample is sequenced in a massively parallel way (or RNA in case of metatranscriptomics). The goal of these studies is usually to identify certain microbes in a sample, or to taxonomically or functionally characterize a microbial community. There are different ways to process and analyze metagenomes, such as the targeted amplification and sequencing of the 16S ribosomal RNA gene (amplicon sequencing, used for taxonomic profiling) or shotgun sequencing of the complete genomes in the sample.

After primary processing of the NGS data (which we will not perform in this exercise), a common approach is to compare the metagenomic sequencing reads to reference databases composed of genome sequences of known organisms. Sequence similarity indicates that the microbes in the sample are genomically related to the organisms in the database. By counting the sequencing reads that are related to certain taxa, or that encode certain functions, we can get an idea of the ecology and functioning of the sampled metagenome.

When the sample is composed mostly of viruses we talk of metaviromics. Viruses are the most abundant entities on earth and the majority of them are yet to be discovered. This means that the fraction of viruses that are described in the databases is a small representation of the actual viral diversity. Because of this, a high percentage of the sequencing data in metaviromic studies show no similarity with any sequence in the databases. We sometimes call this unknown, or at least uncharacterizable fraction as viral dark matter. As additional viruses are discovered and described and we expand our view of the Virosphere, we will increasingly be able to understand the role of viruses in microbial ecosystems.

Today we will re-analyze the metaviromic sequencing data from 2010 where the crAssphage, the most prevalent bacteriophage in humans, was described for the first time. It was named after the cross-assembly procedure employed in the analysis. Besides replicating the cross-assembly, today we will follow an alternative approach using state-of-the-art bioinformatic tools that will allow us to get the most out of the samples.

The dataset for the workshop

During this workshop you will re-analyze the metaviromic sequencing data from Reyes et al., 2010 where the crAssphage, the most prevalent bacteriophage among humans, was described for the first time.

In this study, shotgun sequencing was carried out in the 454 platform to produce unpaired (also called single-end) reads. Raw sequencing data is usually stored in FASTQ format, which contains the sequence itself and the quality of each base. Check out this video to get more insight into the sequencing process and the FASTQ format. To make things quicker, the data you are going to analyze today is in FASTA format, which does not contain any quality information. In the FASTA format we call header, identifier or just name to the line that precedes the nucleotide or aminoacid sequence. It always start with a > symbol and should be unique for each sequence.

Let’s get started by downloading and unzipping the file file with the sequencing data in a directory called 0_raw-data. After this, quickly inspect one of the samples so you can see how a FASTA file looks like.

# create the directory and move to it

$ mkdir 0_raw-data

$ cd 0_raw-data

# download and unzip

$ wget https://github.com/MGXlab/Viromics-Workshop-MGX/raw/gh-pages/data/day_1/Reyes_fasta.zip

$ unzip Reyes_fasta.zip

# show the first lines of a FASTA file

$ head F1M.fasta

You will use seqkit stats to know how the sequencing data looks like. It calculates basic statistics such as the number of reads or their length. Have a look at the seqkit stats help message with the -h option. Remember you can analyze all the samples altogether using the star wildcard (*) like this *.fasta, which literally means every file ended with ‘.fasta’ in the folder. Which are the samples with the maximum and minimum number of sequences? In overall, which are the mean, maximum and minimum lengths of the sequences?

# get basic statistics with seqkit

$ seqkit stats 0_raw-data/Reyes_fasta/*.fasta

Notice how the samples are named. Can you say if they are related in some way? Check the paper (Reyes et al Nature 2010) to find it out.

Key Points

Metagenomics is the culture-independent study of the collection of genomes from different microorganisms present in a complex sample.

We call dark matter to the sequences that don’t match to any other known sequence in the databases.

FASTA format does not contain sequencing quality information.

Next Generation Sequencing data is made of short sequences.

Metavirome assembly

Overview

Teaching: 20 min

Exercises: 40 minQuestions

What is a sequence assembly?

How is different a cross-assembly from a normal assembly?

Objectives

Run a cross-assembly with all the samples.

Assemble each sample separately and combine the results.

Assembly and cross-assembly

Sequence assembly is the reconstruction of long contiguous genomic sequences (called contigs or scaffolds) from short sequencing reads. Before 2014, a common approach in metagenomics was to compare the short sequencing reads to the genomes of known organisms in the database (and some studies today still take this approach). However, recall that most of the sequences of a metavirome are unknown, meaning that they yield no matches when are compared to the databases. Because of this, we need of database-independent approaches to describe new viral sequences. As bioinformatic tools improved, sequence assembly enabled recovery of longer sequences of the metagenomic datasets. Having a longer sequence means having more information to classify it, so using metagenome assembly helps to characterize complex communities such as the gut microbiome.

In this lesson you will assemble the metaviromes in two different ways.

Cross-Assembly

In a cross-assembly, multiple samples are combined and assembled together, allowing for the discovery of shared sequence elements between the samples. If a virus (or other sequence element) is present in several samples, its sequencing reads from the different samples will be assembled together in one contig. After this we can know which contigs are present in which sample by mapping the sequencing reads from each sample to the cross-assembly.

You will perform a cross-assembly as in Dutilh et al., 2014. For this, merge the sequencing reads from all the samples into one single file called all_samples.fasta. We will use the assembler program SPAdes (Bankevich et al., 2012), which is based on de Bruijn graph assembly from kmers and allows for uneven depths, making it suitable for metagenome assembly. As stated above, this cross-assembly will combine the metagenomic sequencing reads from all twelve viromes into contigs. Because of the data we have, we will run SPAdes with parameters --iontorrent and --only-assembler, and parameters -s and -o for the input and output. Look at the help message with spades.py -h to know more details about the parameters. Look at the questions below while the command is running (around 10 minutes).

# merge the sequences

$ cat *.fasta > all_samples.fasta

# create a folder for the output cross-assembly

$ mkdir -p 1_assemblies/cross_assembly

# complete the spades command and run it

$ spades.py -o 1_assemblies/cross_assembly ...

Ion Torrent is a sequencing platform, as well as 454, the platform used to sequence the data you are using. Note well we used --iontorrent parameter when running SPAdes. This is because there is not a parameter --454 to accommodate for the peculiarities of this platform, and the most similar is Ion Torrent. Specifically, both platforms are prone to errors in homopolymeric regions. Have a look at this video from minute 06:50, and explain what is an homopolymeric region, and how exactly the Ion Torrent and 454 platforms fail on them.

Regarding the --only-assembler parameter, we use it to avoid the read error correction step, where the assembler tries to correct single base errors in the reads by looking at the k-mer frequency and quality scores. Why are we skipping this step?

Separate assemblies

The second approach consists on performing separate assemblies for each sample and merging the resulting contigs at the end. Note well if a species is present in several samples, this final set will contain multiple contigs representing the same sequence, each of them coming from one sample. Because of this, we will further de-replicate the final contigs to get representative sequences.

If you wouldn’t know how to run the 12 assemblies sequentially with one command, check block below. Else, create a folder 1_assemblies/separate_assemblies and put each sample’s assembly there (ie. 1_assemblies/separate_assemblies/F1M). Use the same parameters as in the cross-assembly.

Process multiple samples sequentially

Sometimes you need to do the same analysis for different samples. For those cases, instead of waiting for one sample to finish to start off with the next one, you can set a command to process all of them sequentially.

First you need to define a variable (ie. SAMPLES) with the name of your samples. Then, you can use a

forloop to iterate the samples and repeat the analysis command, which is everything between;doand the;done. Note well the sample name is just the suffix of the input and output and you still need to add the proper directory and file extension.Let’s say you have sequencing reads in the files

sample1.fastq,sample2.fastqandsample3.fastq, each of them representing a sample. You want to align them to a given genome using bowtie2 and save the output toalignments/sample1_aligned.sam,alignments/sample2_aligned.samandalignments/sample3_aligned.sam. You could do this:# define a variable with the names of the samples export SAMPLES="sample1 sample2 sample3" # iterate the sample names in SAMPLES for sample in $SAMPLES; do bowtie2 -x genome_index -1 ${sample}.fastq -S alignments/${sample}_aligned.sam ; done

Once the assemblies had finished, you will combine their scaffolds in a single file.

The identifier of a contig/scaffold from SPAdes has the following format (from the SPAdes manual): >NODE_3_length_237403_cov_243.207, where 3 is the number of the contig/scaffold, 237403 is the sequence length in nucleotides and 243.207 is the k-mer coverage.

It might happen that 2 contigs from different samples’ assemblies have the same identifier, and

recall from earlier this morning that

So, just in case, we will add the sample identifier at the beginning of the scaffolds identifiers

to make sure they are different between samples. Use the Python script rename_scaffolds.py

for this, which will create a scaffolds_renamed.fasta file for each sample’s assembly. Then,

merge the results into 1_assemblies/separate_assemblies/all_samples_scaffolds.fasta.

# download the python script

$ wget https://raw.githubusercontent.com/MGXlab/Viromics-Workshop-MGX/gh-pages/code/day1/rename_scaffolds.py

# include sample name in scaffolds names

$ python rename_scaffolds.py -d 1_assemblies/separate_assemblies

# merge renamed scaffolds

$ cat 1_assemblies/separate_assemblies/*/scaffolds_renamed.fasta > 1_assemblies/separate_assemblies/all_samples_scaffolds.fasta

To de-replicate the scaffolds, you will cluster them at 95% Average Nucleotide Identify (ANI) over 85% of the length of the shorter sequence, cutoffs often used to cluster viral genomes at the species level. For further analysis, we will use the longest sequence of the cluster as a representative of it. Then, with this approach we are:

- Clustering complete viral genomes at the species level

- Clustering genome fragments along with very similar and longer sequences

Look at the CheckV website and follow the steps under Rapid genome clustering based on pairwise ANI section to perform this clustering.

# create a blast database with all the scaffolds

$ makeblastdb ...

# compare the scaffolds all vs all using blastn

$ blastn ...

# download anicalc.py and aniclust.py scripts

$ wget https://raw.githubusercontent.com/MGXlab/Viromics-Workshop-MGX/gh-pages/code/day1/anicalc.py

$ wget https://raw.githubusercontent.com/MGXlab/Viromics-Workshop-MGX/gh-pages/code/day1/aniclust.py

# calculate pairwise ANI

$ python anicalc.py ...

# cluster scaffolds at 95% ANI and 85% aligned fraction of the shorter

$ python aniclust.py -o 1_assemblies/separate_assemblies/my_clusters.tsv ...

The final output, called my_clusters.tsv, is a two-columns tabular file with the representative sequence of the cluster in the first column, and all the scaffolds that are part of the cluster in the second column. Using cut and its -f parameter to put all the representatives names in one file called my_clusters_representatives.txt. Then, use seqtk subseq to grab the sequences of the scaffolds listed in my_clusters_representatives.txt and save them to my_clusters_representatives.fasta.

# put the representatives names in 'my_clusters_representatives.txt'

$ cut ... > 1_assemblies/separate_assemblies/my_clusters_representatives.txt

# extract the representatives sequences using seqtk

$ seqtk subseq ... > 1_assemblies/separate_assemblies/my_clusters_representatives.fasta

Scaffolding in SPAdes

Previously this morning you saw how, using paired-end reads information, contigs can be merged in scaffolds. However, we have been using the scaffolds during this

lesson. Identify a scaffold with clear evidence of merged contigs, and explain how is that possible if we are using single-end reads.Solution

Not the solution, but a hint ;) check the SPAdes manual

Key Points

With sequence assembly we get longer, more meaningful genomic fragments from short sequencing reads.

In a cross-assembly, reads coming from the same species in different samples are merged into the same contig.

Lunch break

Overview

Teaching: 55 min

Exercises: 0 minQuestions

Objectives

Key Points

Visualizing the assembly graph

Overview

Teaching: 20 min

Exercises: 30 minQuestions

How the k-mer size contributes to the conectivity of the graph?

Are related species connected in the graph?

Objectives

Understanding how k-mer size affects the topology of the graph

Understanding how the presence of similar species in the sample affects the graph

Effect of k-mer size

The choice of the size of k-mer has a great impact on the final assembly. When running SPAdes, you might have noticed it doesn’t use a single k-mer size per assembly but rather a range of k-mer sizes (21, 33 and 55 in this case), where each subsequent graph is built on the previous one. This is what they call a multisized de Bruijn graph. which benefits from the high connectivity of small k-mer sizes and the simplicity of the large ones. From Bankevich et al., 2012, smaller values of k collapse more repeats together, making the graph more tangled. Larger values of k may fail to detect overlaps between reads, particularly in low coverage regions, making the graph more fragmented. […] Ideally, one should use smaller values of k in low-coverage regions (to reduce fragmentation) and larger values of k in high-coverage regions (to reduce repeat collapsing). The multisized de Bruijn graph allows us to vary k in this manner.

Recall that k-mer size indicates the amount of overlap (k-1) that is necessary to perform the junction in the de Bruijn graph. The longer the k-mer is, the longer stretch of correct nucleotides are necessary to perform such junction. Knowing this, which k-mer sizes (small or large) are more affected by sequencing errors? Explain why.

We will use the Bandage tool to visualize

the graph. In the releases section,

follow instructions to download the most appropriate version. If you are in attending

the workshop live, download Bandage_Ubuntu-x86-64_v0.9.0_AppImage.zip).

To run it, unzip the file, open a new terminal tab (Ctrl + Shift + t), activate the conda

environment for today, and call Bandage from the terminal like this:

# run Bandage

$ ./Bandage_Ubuntu-x86-64_v0.9.0.AppImage

In File > Load_graph, navigate to any of the per sample assemblies and load

assembly_graph.fastg for the k-mer size 21. Then click Draw graph to see the

graph. Note well this graph has been already compacted by collapsing those nodes

that form linear, unbranched paths (click any large node to see how its length is

way larger than 21, the k-mer size). Without closing the Bandage window, open a new

terminal tab and run Bandage again to load the graph for the k-mer size 55. Answer

questions below:

- Why do we expect the graph to be very tangled with small k-mer sizes such as the K21?

- K55 graph seems easier to traverse, but note well this graph has been constructed using information from previous k-mers too. Can you think of any disadvantage of using only a large k-mer size to construct the graph? Would you expect high or low connnectivity?

Effect of related species

Often in metagenomic samples, a number of strains for a given species are present, and this is particularly evident with viral communities that typically contain an abundance of haplotypes (or quasispecies). Because of the high amount of homologous regions between these strains, the assembly graph is complex as multiple genomes occupy much of the same kmer space. The convergence-divergence structure in the graph generated by these homologous regions make traversing the graph more complex, and mistakes at this point can lead to chimeric contigs containing sequence from more than one strain. Note well the convergence-divergence structure is also observed in horizontal gene transfer events between any species.

The crAssphage in the graph

From Dutil et al., 2014 we know that one the viruses in this dataset is the prototypical crAssphage (p-crAssphage). Moreover, by mapping the sequencing reads back to the cross-assembly they could see a small contig recruiting reads from all the samples, suggesting that the genome where this contig comes from was present in all the samples. Or maybe related genomes that share that genomic sequence.

Let’s identify the p-crAssphage in the cross-assembly graph using Bandage and Blast. Download the p-crassphage genome as follows:

# download p-crassphage genome

$ wget https://raw.githubusercontent.com/MGXlab/Viromics-Workshop-MGX/gh-pages/code/day1/crAssphage.fasta

Then, run Bandage and load the cross-assembly graph under 1_assemblies/cross_assembly/assembly_graph.fastg.

Then, click Create/view BLAST search and use crAssphage.fasta as query. Colored

nodes are the ones showing similarity to the p-crAssphage. In a new Bandage window,

repeat the Blast analysis with the sample F2T1, which was used in the original paper

to reconstruct the p-crassphage. Can you explain what you see? To corroborate

your answer, inspect the assembly_graph.fastg file of F2T1 to know which scaffold

is the p-crassphage. After this, note if it clustered with any other scaffolds from

different samples.

Key Points

Assessing assemblies quality

Overview

Teaching: 15 min

Exercises: 25 minQuestions

Which assembly contains longer contigs?

Which assembly best represents the raw data (sequencing reads)?

Objectives

Assemblies assessment

Now we will measure some basic aspects of the assemblies, such as the fragmentation degree and the percentage of the raw data they contain. Ideally, the assembly would contain a single and complete contig for each species in the sample, and would represent 100% of the sequencing reads.

Fragmentation

Use the QUAST program (Gurevich et al., 2013) to assess how fragmented are the assemblies. Have a look at the possible parameters with quast -h. You will need to run it two times, one per assembly, and save the results to different folders (ie. quast_crossassembly and quast_separate)

# create a folder for the assessment

$ mkdir 1_assemblies/assessment

# run quast two times, one per assembly

$ quast -o 1_assemblies/assessment/<OUTPUT_FOLDER> ...

Raw data representation

You can know the amount of raw data represented by the assemblies by mapping the reads back to them and quantifying the percentage or reads that could be aligned. For this, use the BWA (cite) and Samtools (cite) programs. BWA is a short-read aligner, while Samtools is a suite of programs intended to work with mapping results. Mapping step requires you to first index the assemblies with bwa index so BWA can quickly access them. After it, use bwa mem to align the sequences to the assemblies and save the results in a SAM format file (ie. crossassembly.sam and separate.sam). Then use samtools view to convert the SAM files to BAM format (ie. crossassembly.bam and separate.bam), which is the binary form of the SAM format. Once you have the BAM files, sort them with samtools sort (output could be crossassembly_sorted.bam and separate_sorted.bam). Last, index the sorted BAM files to allow for an efficient processing, and get basic stats of the mapping using samtools flagstats.

# index the assemblies

$ bwa index <ASSEMBLY_FASTA>

# map the reads to each assembly

$ bwa mem ... > 1_assemblies/assessment/<OUTPUT_SAM>

# convert SAM file to BAM file

$ samtools view ...

# sort the BAM file

$ samtools sort ...

# index the sorted BAM file

$ samtools index ...

# get mapping statistics

$ samtools flagstats ...

Compare both assemblies

So far you have calculated some metrics to assess the quality of the assemblies, but bare in mind there also exist also others we can check for this, such as the number of ORFs or the depth of coverage across the contigs. In the report generated by Quast, look at metrics regarding scaffolds length, such as the N50. Can you explain the difference between both assemblies? Regarding the raw data containment, how different are both assemblies? Which metric do you find more relevant for metagenomics? Can you think of other metric to assess the quality of an assembly?

Key Points

Binning contigs

Overview

Teaching: 15 min

Exercises: 60 minQuestions

How many bins do we obtain?

Objectives

One of the drawbacks of the assembly step is that the actual genomes in the sample are usually split across several contigs. This can be a problem for analyses that benefit from having as much complete as possible genomes, such as metabolic pathways reconstruction or gene sharing analyses (check this). With binning we try to put together in a ‘bin’ all the contigs that come from the same genome, so we can have a better representation of it.

As you know from Arisdakessian et al 2021, two features are used to bin contigs: nucleotide composition and depth of coverage. You will use the CoCoNet binning software to bin the set of representative scaffolds you got in the previous lesson. For the nucleotide composition, CoCoNet computes the frequency of the k-mers (k=4 by default) in the scaffolds, taking into account both strands. For the depth of coverage, it counts the number of reads aligning to each scaffold, which at the end is a representation of the relative abundace of that sequence in the sample. This means that for the latter we need to provied CoCoNet with the short-reads aligned to the scaffolds, just as you did in the previous lesson. This time however you are not aligning all the samples altogether as if they were only one, but separately, so you should end up with 12 sorted BAM files. You can use the trick you learned in the previous lesson to process multiple samples sequentially. Don’t forget to index your sorted BAM files at the end.

# map (separately) all the sample to representative set of scaffolds

$ for sample in F*.fasta; do ... ... ... ; done

Once you have your BAM files, install and run CoCoNet with default parameters, and save the

results in the 3_binning folder. It should take 5-10 minutes. Look at questions

below in the meantime.

# deactivate the conda environment before running coconet

$ conda deactivate

# install coconet

$ pip install --user coconet-binning

# Run coconet. Don't forget to include your username in the command.

$ /home/<USERNAME>/.local/bin/coconet run --output 3_binning ...

Binning parameters

CoCoNet incorporates the cutoffs

--min-ctg-len(minimum length of the contigs) and--min-prevalence(minimum number of samples containing a given contig). By default, the first is set to 2048 nucleotides and the second to 2 samples. How increasing or decreasing these parameters would affect the results?

Binning results should be under 3_binning/bins_0.8-0.3-0.4.csv. If you have a look

at this file with head, each of the lines contains the name of a contig together

with the bin identifier, which is a number. Use the script create_fasta_bins.py

to create separate FASTA files for each bin, and save the results in 3_binning/fasta_bins.

# activate the environment again

$ conda activate day1_env

# download the python script

$ wget https://raw.githubusercontent.com/MGXlab/Viromics-Workshop-MGX/gh-pages/code/day1/create_fasta_bins.py

# Have a look at options

$ python create_fasta_bins.py -h

# run the script to create the FASTA bins with the correct options

$ python create_fasta_bins.py -o 3_binning/fasta_bins ...

You will be using these bins for days 3 and 4, so don’t forget where they are!

Key Points

Setup and run DeepVirFinder

Overview

Teaching: 15 min

Exercises: 15 minQuestions

Why do we use different environments for different tools?

How do we run DeepVirFinder?

Objectives

Create a conda environment from a .yaml file

Download DeepVirFinder and start a run

Today you will use different virus discovery tools and do a basic comparison of their performance on the assemblies that you created yesterday. To do this, we will heavily rely on conda to keep our computer environments clean and functional (Anaconda on Wikipedia). Conda enables us to create multiple separate environments on our computer, where different programs can be installed without affecting our global virtual environment.

Why is this useful? Most of the time, tools rely on other programs to be able to run correctly - these programs are the tool’s dependencies. For example: you cannot run a tool that is coded in Python 3 on a machine that only has Python 2 installed (or no Python at all!).

So, why not just install everything into one big global environment that can run everything? We will focus on two reasons: compatibility and findability. The issue with findability is that sometimes, a tool will not “find” its dependency even though it is installed. To reuse the Python example: If you try to run a tool that requires Python 2, but your global environment’s default Python version is Python 3.7, then the tool will not run properly, even if Python 2 is technically installed. In that case, you would have to manually change the Python version anytime you decide to run a different tool, which is tedious and messy. The issue with compatibility is that some tools will just plain uninstall versions of programs or packages that are incompatible with them, and then reinstall the versions that work for them, thereby “breaking” another tool (this might or might not have happened during the preparation of this course ;) ). To summarize: keeping tools in separate conda environments will can save you a lot of pain.

First, download the conda environments for today from here and use the file deepvir.yaml to create the conda environment for DeepVirFinder (Ren et al. 2020; DeepVirFinder Github).

# create the directory for today's tools and results

$ mkdir tools

$ mkdir results

# download and unzip the conda environemnts

$ wget https://github.com/MGXlab/Viromics-Workshop-MGX/raw/gh-pages/data/day_2/day2_envs.tar.gz

$ tar zxvf day2_envs.tar.gz

$ cd envs

# create the conda environment dvf for DeepVirFinder from deepvir.yaml

$ conda env create -f deepvir.yaml

This will take a couple of minutes. In the meantime, you can open another terminal window and download the DeepVirFinder github repository that contains the scripts.

# download the DeepVirFinder Github Repository

$ cd ~/ViromicsCourse/day2/tools/

$ git clone https://github.com/jessieren/DeepVirFinder

# Copy yesterday's contigs from the results

$ cp /path/to/yesterdays/scaffolds.fasta ~/ViromicsCourse/day2/

The file dvf.py inside the folder tools/DeepVirFinder/ contains the code to run DeepVirFinder. Once the conda environment has been successfully created, run DeepVirFinder on the contigs you have assembled yesterday (use the scaffolds from the cross-assembly, not the pooled separate assemblies). We want to focus on the most reliable contigs and will therefore only input contigs that are over 300 nucleotides in length (DeepVirFinder actually has an option that makes it pass sequences below a certain length, but some of the other tools do not have that option).

# Find out until which line you have to truncate the file to only include contigs longer than 300nt

$ less -N scaffolds.fasta

# While reading the file with less, you can look for a pattern by typing

/_length_300_

# Make truncated contig file (replace xxx with the last line number you want to include - the sequence of the last contig longer than 200nt)

$ head -n xxx ~/ViromicsCourse/day2/scaffolds.fasta > ~/ViromicsCourse/day2/scaffolds_over_300.fasta

# Activate the deepvir conda environment

$ conda activate dvf

# Run DeepVirFinder

(dvf)$ python3 dvf.py -i ~/ViromicsCourse/day2/scaffolds_over_300.fasta -o ~/ViromicsCourse/day2/results/

DeepVirFinder will now start writing lines containing which part of the file it is processing.

While DeepVirFinder is running, listen to the lecture.

Key Points

Different tools have different environments. Keeping them in separate environments makes runs reproducible and prevents a variety of problems.

We are running DeepVirFinder during the lecture, because the run takes ~50 minutes.

Listen to Virus Detection lecture

Overview

Teaching: 60 min

Exercises: 0 minQuestions

Objectives

Listen to Tina’s lecture.

Listen to Tina’s lecture while DeepVirFinder is running.

Key Points

Setup and run PPR-Meta

Overview

Teaching: 10 min

Exercises: 20 minQuestions

How do we install and run PPR-Meta?

How are PPR-Meta and DeepVirFinder different?

Objectives

Create the PPR-Meta conda environment

Run PPR-Meta

In the lecture you have heard what different strategies tools can use to detect viral sequences from assembled metagenomes. The overall objective of today is to test how these different strategies affect which sequences are annotated as viral and which ones are not.

The second tool we are going to run is called PPR-Meta (Fang et al. 2019). Like DeepVirFinder, PPR-Meta uses deep learning neural networks to annotate sequences as bacterial, viral, or plasmid. Both PPR-Meta and DeepVirFinder initially encode DNA sequences the same way: as a list of binary vectors. This means that each nucleotide is encoted as a binary vector, for example: A=[1,0,0,0], C=[0,1,0,0], and so on. This type of recoding categorical data in a binary way is known as one hot encoding. In DeepVirFinder, this encoding is used to learn genomic patterns. In essence, DeepVirFinder learns how often certain DNA motifs appear in each sequence by “sliding” a 10-nucleotide window over the sequence. The window is then compared to known motifs pre-trained model to decide whether a sequence should be classified as bacterial or phage. PPR-Meta is a little simpler: The DNA is encoded in the manner mentioned above. Then, PPR-Meta does the same thing for codons - in this case, the binary vectors each have a length of 64, as there are 64 different possible codons. The two resulting matrices are the input for the prediction of phage/plasmid/microbial sequence.

Getting PPR-Meta to run is a little more work than DeepVirFinder. First, deactivate the dvf conda environment if you haven’t already.

# deactivate deepvir

(dvf)$ conda deactivate

$

Then, create a conda environment for pprmeta from the file pprmeta.yaml (If you cannot remember how to do this, look back to the previous lesson) and activate the new environment. Afterwards, you have to download the MATLAB Runtime from this website. Make sure that you download the linux version and specifically, version 9.4.

# unzip MCR

(pprmeta)$ unzip MCR_R2018a_glnxa64_installer.zip

# install MCR into the tools folder

(pprmeta)$ ./install -mode silent -agreeToLicense yes -destinationFolder ~/ViromicsCourse/day2/tools

The installation will take a little while. In the meantime, to better understand the differences between the tools, read the description of how they work in their publications.

PPR-Meta - Read at least the sections Dataset construction and Mathematical model of DNA sequences

DeepVirFinder - Read the sections DeepVirFinder: viral sequences prediction using convolutional neural networks, Determining the optimal model for DeepVirFinder, and the first two paragraphs of section Predicting viral sequences using convolutional neural networks (don’t worry about the math to much).

Next, download PPR-Meta and make the main script into an executable.

# download PPR-Meta

(pprmeta)$ git clone https://github.com/zhenchengfang/PPR-Meta.git

(pprmeta)$ cd PPR-Meta

# make main script into an executable

(pprmeta)$ chmod u+x ./PPR-Meta

Finally, we have to edit the so-called LD_LIBRARY_PATH, a variable that contains a list of filepaths. This list helps PPR-Meta to find the MCR files that it needs to run, as the program doesn’t “know” where MCR is installed. PPR-Meta provides a small sample dataset that you can test the installation on.

# Add MCR folders to LD_LIBRARY_PATH

# Note: If you have another version of MCR installed in your conda version, you might need to unset the library path first using: unset LD_LIBRARY_PATH

(pprmeta)$ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/ViromicsCourse/day2/tools/v94/runtime/glnxa64

(pprmeta)$ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/ViromicsCourse/day2/tools/v94/bin/glnxa64

(pprmeta)$ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/ViromicsCourse/day2/tools/v94/sys/os/glnxa64

(pprmeta)$ export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/ViromicsCourse/day2/tools/v94/extern/bin/glnxa64

# You can test whether PPR-Meta is running correctly by running

(pprmeta)$ cd ~/ViromicsCourse/day2/tools/PPR-Meta

(pprmeta)$ ./PPR-Meta example.fna results.csv

If the test run finishes successfully, then you can run PPR-Meta on the truncated contig file.

# Run PPR-Meta on contigs

(pprmeta)$ ./PPR-Meta ../../scaffolds_over_300.fasta ../../results/scaffolds_over_300_pprmeta.csv

PPR-Meta is very fast - your run should only take a couple of minutes. When it’s finished, move on to the next section. If you don’t have the database for virsorter on your computer yet, you can start the download in the background now. You can download the data here and unzip it after the download.

Key Points

Setting up the right conda environment for a tool can be tricky.

PPR-Meta runs much faster than DeepVirFinder.

Setup and Run VirFinder

Overview

Teaching: 15 min

Exercises: 15 minQuestions

How do we run VirFinder and how is it different from the other tools?

Objectives

Setup and Run VirFinder

Before we start analyzing the results from DeepVirFinder and PPR-Meta, we will run a third tool: VirFinder (Ren et al. 2017). As you might be able to tell from the name, VirFinder is DeepVirFinder’s predecessor. VirFinder uses a different type of machine learning to identify viruses: logistic regression. Logistic regression is best used for qualitative prediction, that is, to decide which of two classes an object belongs to. VirFinder does this using kmer frequencies. So, VirFinder looks at each kmer of length 8 within each sequence and builds a matrix of which kmers were found and how frequently. Based on the comparison between this kmer matrix and the training data, VirFinder makes a prediction of whether a sequence belongs to a virus or a microbe.

Based on your experience so far, will this method be faster or slower than the tolls that you have run before?

To setup VirFinder, first create the conda environment from virfinder.yaml (don’t forget to deactivate the pprmeta environment if you are still using it).

If you had trouble running it before lunch, you can download the updated environment here

Then, within the virfinder environment run rstudio.

# Run rstudio GUI within the virfinder environment

$ rstudio

The next few commands will be in R.

# Attach the VirFinder package

> library("VirFinder")

# Run VirFinder on our dataset

> predVirFinder <- VF.pred('~/ViromicsCourse/day2/scaffolds_over_300.fasta')

# Save the resulting data frame as a comma-separated textfile

> write.csv(predResult, "~/ViromicsCourse/day2/results/scaffolds_over_300_virfinder.csv", row.names=F)

Discussion: Logistic Regression

Is VirFinder faster or slower than you predicted? What could be the reason for why it is so fast?

Where would you draw the decision boundrary?

Stay in RStudio for the next section.

Key Points

Logistic Regression is another type of Machine Learning than can be used to distinguish between viral and non-viral sequences

Comparing Virus Identification Tools

Overview

Teaching: 15 min

Exercises: 15 minQuestions

How can we compare the results of different virus identification tools?

Which tool finds more phages?

Why would the tools disagree?

Objectives

Read the results so far into data frames.

Find out how many viral sequences each tool has predicted.

Discuss how moving the decision boundrary in VirFinder and DeepVirFinder affects your result.

Before we run the final tool over lunch, we will shortly inspect the results of the three tools we have run so far. The following steps are marked as challenges, so that you can try for yourself if you already have experience with R. If you do not have experience with R or you don’t have a lot of time before lunch, feel free to use the solution to continue.

The results of VirFinder should already be in your R workspace in a dataframe called predVirFinder. If you have closed RStudio after the last section, load the VirFinder results into R from the csv file that you have created in the last section, the same way as the others.

Challenge: Loading the results of DeepVirFinder and PPR-Meta into R

You have successfully run two tools apart from VirFinder, which have produced a comma-separated file (DeepVirFinder) and a tab-separated file (PPR-Meta). Load them into your R workspace.

Hint: Make sure your working directory is set correctly

Solution

# Load DeepVirFinder results > predDeepVir <- read.csv('~/ViromicsCourse/day2/results/scaffolds_over_300_deepvirfinder.csv') # Load PPR-Meta results > predPPRmeta <- read.table('~/ViromicsCourse/day2/results/scaffolds_over_300_pprmeta.txt', header=T)

Discussion: Comparing Tools

Look at the three data frames you have created. What kind of information can you find in there? Is it clear to you what each of the values mean?

Think of ways to compare the results of the three tools. How would you decide which of these three tools is best suited for your research?

Challenge: Counting phage annotations

One way to compare tools would be to compare how many sequences are annotated as phages. Do that for DeepVirFinder, PPR-Meta, and VirFinder. Given what you know about the dataset: How many sequences do you expect to be viral? How many of the scaffolds are annotated as viral by each tool?

Solution

For PPR-Meta, counting the number of sequences annotated as phages is the most straightforward.

# Sum up all the rows that are annotated as a phage > sum(predPPRmeta$Possible_source =='phage')For VirFinder and DeepVirFinder, counting the number of sequences annotated as phages is more complicated. The simplest way would be to count how many sequences have a score above 0.5.

# Sum up all the rows that have a score above 0.5 > sum(predDeepVirFinder$score > 0.5) > sum(predVirFinder$score > 0.5)However, you might have seen that there is also a p-value available for the VirFinder and DeepVirFinder results. You might want to use them instead to decide whether you test your prediction.

# Sum up all the rows that have a p-value of max 0.05 > sum(predDeepVirFinder$pvalue <= 0.05) > sum(predVirFinder$pvalue <= 0.05) # Or, you might want to be even stricter and count only sequences with a p-value of max 0.01 > sum(predDeepVirFinder$pvalue <= 0.01) > sum(predVirFinder$pvalue <= 0.01)

Discussion: Decision boundraries

With VirFinder and DeepVirFinder, you have seen that the decision boundrary is somewhat up to the user. How will moving the decision boundrary “up” (i.e. making it stricter) or “down” affect the results?

If instead of a viromic dataset you were looking at a gut microbiome dataset, how would that affect your choice for the decision boundrary?

Can you already tell which of these three tools is the best?

Key Points

Despite out data being almost exclusively viral, the tools identify max. 2/3 of the sequences as viral.

Making the decision boundrary less strict will include more sequences, which might seem like an advantage in this case. However, if we were working with a mixed metagenomic dataset, this would mean that we would falsely annotate microbial sequences as viral.

Setup and run VirSorter

Overview

Teaching: 10 min

Exercises: 20 minQuestions

How do we install and run VirSorter?

How is VirSorter different than the previous tools?

Objectives

Install and run VirSorter

The last virus identification tool, which we will run over lunch, is called VirSorter (Roux et al. 2015). VirSorter is different from the other tools in that it actually considers homology in predicting whether a contig belongs to a phage or a microbe. VirSorter distinguishes between “primary” and “secondary” metrics when deciding how to annotate a sequence. Primary metrics are the presence of viral hallmark genes an their homologs, and the enrichment of known viral genes. Secondary metrics include an enrichment in uncharacterized genes, depletion of PFAM-affiliated genes, and two metrics of genome structure.

Challenge: Viral genome structure

VirSorter uses two genome structure metrics to distinguish phage sequences from bacterial sequences. Can you think of viral genome structure metrics that could be useful for prediction?

Solution

VirFinder uses:

- an enrichment of short genes

- depletion in strand switch

To run VirSorter, first create the necessary environment from virsorter.yaml and activate it. Then, download virsorter into the day2/tools folder. Note that the following code is in bash again.

# Install Virsorter

$ cd ~/ViromicsCourse/day2/tools

$ git clone https://github.com/simroux/VirSorter.git

$ cd VirSorter/Scripts

$ make clean

$ make

# Make symbolic link of executable scripts in the environment's bin

# It is important to use the absolute path and not the relative path to the Scripts folder (replace XXX with the number of your account, or replace the absolute path with the path to your own anaconda if you join online)

$ ln -s ~/ViromicsCourse/day2/tools/VirSorter/wrapper_phage_contigs_sorter_iPlant.pl /mnt/local/prakXXX/anaconda3/envs/virsorter/bin

$ ln -s ~/ViromicsCourse/day2/tools/VirSorter/Scripts /mnt/local/prakXXX/anaconda3/envs/virsorter/bin

Finally, install metagene_annotator into the conda environment.

# Install metagene_annotator

$ conda install metagene_annotator -c bioconda

Finally, run VirSorter. Note that VirSorter is very particular about its working directory. It is best if it doesn’t exist beforehand (VirSorter will create it). If a run fails, then completely remove the working directory before you restart it.

# Run VirSorter

# Under the argument --data-dir put the link https://blahblah.com/virsorter-data

$ wrapper_phage_contigs_sorter_iPlant.pl -f ~/ViromicsCourse/day2/scaffolds_over_300.fasta --db 1 --wdir ~/ViromicsCourse/day2/results/virsorter --ncpu 1 --data-dir ~/ViromicsCourse/day2/tools/virsorter-data

If your run fails because “Step 1 failed”, then check the error file in ~/ViromicsCourse/day2/results/virsorter/logs/. If the error is “Can’t locate Bio/Seq.pm in @inc (you may need to install the Bio::Seq module)…”, then you need to copy a perl folder in the virsorter environment folder.

# Error fix for Can't locate Bio/Seq.pm in @inc

$ cd /mnt/local/prakXXX/anaconda3/envs/virsorter/lib/

$ cp -r perl5/site_perl/5.22.0/Bio/ site_perl/5.26.2/x86_64-linux-thread-multi/

Then try to run the command again. If you have more problems, let us know. Guten Appetit! Eet smakkelijk! Have a good lunch!

Key Points

VirSorter is a homology-based tool.

Because Virsorter has to compare each sequence to a database, it is slower that many other tools.

Lunch Break

Overview

Teaching: 50 min

Exercises: 0 minQuestions

What is the tastiest food we can find in the vicinity?

Objectives

Find the tastiest food in the vicinity.

Take a Lunch break of ~45 minutes.

Key Points

Nutrition is important

Guten Appetit!

Compare Results for four tools

Overview

Teaching: 60 min

Exercises: 60 minQuestions

How different are the predictions of the tools per contig?

How sensitive are the different tools we used?

Objectives

Assess whether the tools agree or disagree on which contigs are viral.

Compare the sensitivity of virus detection for the four tools.

Find out which tools make the most similar predictions.

Now that VirSorter has finished, take a look at the results (the main results file is called VIRSorter_global-phage-signal.csv. What kind of information can you find there?

If you couldn’t run VirSorter because of time or because you couldn’t download the database, you can downoad the virsorter results from here and unzip it using tar -xzvf.

Because the output of VirSorter looks a lot different compared to the output of the other tools, we will first reformat it into a similar file. Download the script reformat_virsorter_result.py from here. This script will read in the VirSorter results, add the contigs that were not predicted as phages, and add an arbitrary score to each prediction. A phage annotation that was predicted as “sure” (categories 1 and 4) gets a score of 1.0, a “somewhat sure” (categories 2 and 5) prediction gets a score of 0.7, and a “not so sure” (categories 3 and 6) prediction gets a score of 0.51. All other sequences get a score of 0.

Do you think these score represent the predictions well? Why (not)?

This script is used in the format:

# Format the virsorter result

$ python3 reformat_virsorter_result.py /path/to/virsorter_result_file.csv /path/to/output/file.csv

# This only works if the VirSorter result file is in its original place in the virsorter-results folder

# If you run the script from within the virsorter-results folder, give the filepath as ./VIRSorter_global-phage-signal.csv

You can now read the new csv file into R, e.g. as predVirSorter.

Count how many contigs are predicted to be phages (If you have trouble with this, look back to section 2.5). Can you explain the difference in numbers?

Now, let’s compare all the tools directly to each other. Again, all the exercises will be marked as challenges so that you can figure out the code for yourself or use the code we provide.

Challenge: Creating a dataframe of all results

First, create a data frame that is comprised of the contigs as rows (not as rownames though), and contains a column for each tool. Create a data frame that contains 1 if a sequence is annotated as phage by a tool and 0 if not. For VirFinder and DeepVirFinder, use a score of >0.5 as decision boundrary.

Solution

# Create the data frame and rename the column for the contig names > pred<-data.frame(predDeepVirFinder$name) > names(pred)[names(pred) == "predDeepVirFinder.name"] <- "name" # Add the PPR-Meta results > pred$pprmeta<-predPPRmeta$Possible_source # Change the values in the pprmeta column so that phage means 1 and not a phage means 0 > pred$pprmeta[pred$pprmeta =='phage'] <- 1 > pred$pprmeta[pred$pprmeta !=1] <- 0 # Add the DeepVirFinder and VirFinder Results > pred$virfinder<-predVirFinder$score > pred$virfinder[pred$virfinder >=0.5] <- 1 > pred$virfinder[pred$virfinder <0.5] <- 0 > pred$deepvirfinder<-predDeepVirFinder$score > pred$deepvirfinder[pred$deepvirfinder >=0.5] <- 1 > pred$deepvirfinder[pred$deepvirfinder <0.5] <- 0 # Add the VirSorter results > pred$virsorter<-predVirSorter$pred

We have seen that some tools annotate more contigs as viral than others. However, that doesn’t automatically mean that the contigs that are annotated as viral are the same. In the next step, we will see whether the tools tend to agree on which sequences are viral and which aren’t.

Challenge: Visualize the consensus of the tools

From the data frame, create a heatmap. Since there are thousands of contigs in the data frame, we cannot visualize them all together. Therefore, make at least three different heatmaps of 50 contigs each, choosing the contigs you want to compare. How do you expect, the comparison will vary?

Solution

# Select three ranges of 50 contigs each > pred.long<-pred[1:50,] > pred.medium<-pred[2500:2550,] > pred.short<-pred[8725:8775,] # Attach the packages necessary for plotting and prepare the data > library(ggplot2) > library(reshape2) > pred.l.melt<-melt(pred.long, id="name") > pred.m.melt<-melt(pred.medium, id="name") > pred.s.melt<-melt(pred.short, id="name") # Plot the three heatmaps, save, and compare > ggplot(pred.l.melt, aes(variable, name, fill=value))+geom_tile() > ggsave('~/ViromicsCourse/day2/results/contigs_large_binary.png', height=7, width=6) > ggplot(pred.m.melt, aes(variable, name, fill=value))+geom_tile() > ggsave('~/ViromicsCourse/day2/results/contigs_medium_binary.png', height=7, width=6) > ggplot(pred.s.melt, aes(variable, name, fill=value))+geom_tile() > ggsave('~/ViromicsCourse/day2/results/contigs_short_binary.png', height=7, width=6)

Discussion: Consensus

Do the tools mostly agree on which sequences are viral?

How would you explain that?

Is the degree of consensus the same across different contig lengths?

What is your expectation about the scores for the contigs where the tools agree/disagree?

In the next step, do the same as before, but now instead of using a binary measure (each sequence either is annotated as viral or not), use a continuous measure, such as the score. Make sure to use the same rows as before.

Challenge: Visualize the difference in scores

As in the challenge above, create a data frame with a column that contains contig ids and a column per tool. This time, use the continuous score as measure.

Solution

# Create the data frame of prediction scores > predScores<-pred > predScores$deepvirfinder<-predDeepVirFinder$score > predScores$virfinder<-predVirFinder$score > predScores$pprmeta<-predPPRmeta$phage_score > predScores$virsorter<-predVirSorter$score # Select three ranges of 50 contigs each > predScores.long<-predScores[1:50,] > predScores.medium<-predScores[2500:2550,] > predScores.short<-predScores[8725:8775,] # Prepare the data for plotting > predScores.l.melt<-melt(predScores.long, id="name") > predScores.m.melt<-melt(predScores.medium, id="name") > predScores.s.melt<-melt(predScores.short, id="name") # Plot the three heatmaps, save, and compare # The color palette in scale_viridis_c is optional. Scale_viridis_c is a continuous colour scale that works well for distinguishing colours # If you prefer a binned colour scale, you can also consider using scale_viridis_b > ggplot(predScores.l.melt, aes(variable, name, fill=value))+geom_tile()+scale_viridis_c() > ggsave('~/ViromicsCourse/day2/results/contigs_large_continuous.png', height=7, width=6) > ggplot(predScores.m.melt, aes(variable, name, fill=value))+geom_tile()+scale_viridis_c() > ggsave('~/ViromicsCourse/day2/results/contigs_medium_continuous.png', height=7, width=6) > ggplot(predScores.s.melt, aes(variable, name, fill=value))+geom_tile()+scale_viridis_c() > ggsave('~/ViromicsCourse/day2/results/contigs_short_continuous.png', height=7, width=6)

Discussion: Differences in Score

Compare the heatmaps of continuous scores to the binary ones. Focus on the contigs for which the tools disagree. Are the scores more ambiguous for these contigs?

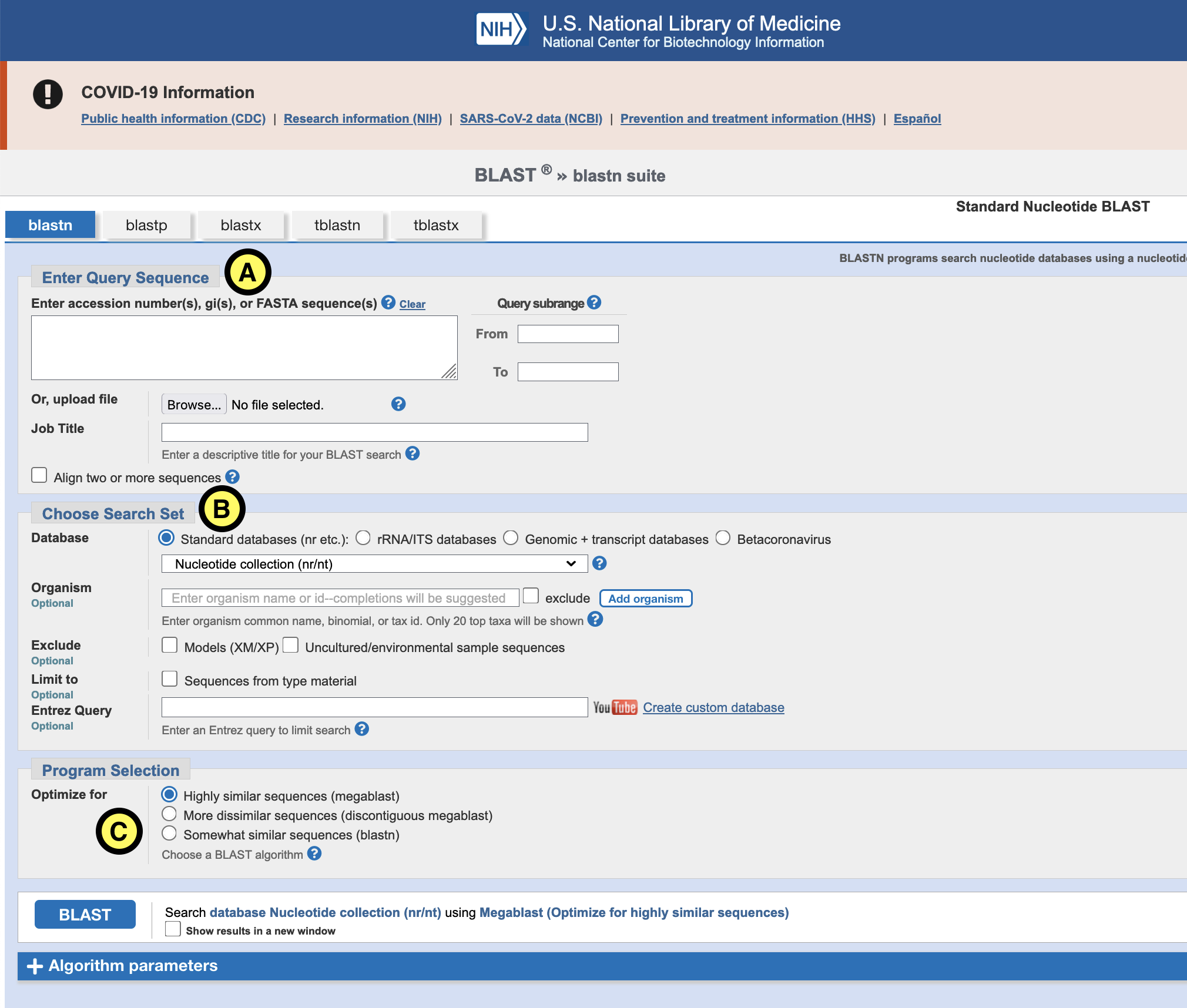

Pick out 1-5 contigs that you find interesting based on the tool predictions. Grab their sequences from the contig fasta file and go to the BLAST website. Pick out one or two BLAST flavours that you deem appropriate and BLAST the interesting contigs. If you have questions about running BLAST, don’t hesitate to ask one of us. What kind of organisms do you find in your hits?

We might also want to take a look at which tools make the most similar predictions. For this, we will make a distance matrix and a correlation matrix.

Challenge: Distance and Correlation Matrices

Find out which tools make the most similar predictions by making a euclidean distance matrix and a preason correlation matrix for all four tools.

Hint Don’t forget to exclude the first column from the distance and correlation functions.

Solution

# Make a euclidean distance matrix for binary annotation > pred.t <- t(pred[,2:5]) > dist.binary<-dist(pred.t, method="euclidean") # Make a euclidean distance matrix for continuous annotation > predScores.t <- t(predScores[,2:5]) > dist.cont<-dist(predScores.t, method="euclidean") # Make a correlation matrix for continuous annotation > dist.corr<-as.dist(cor(predScores[,2:5], method='pearson'))

Finally, let us calculate, for each tool, the sensitivity metric. Sensitivity is a measure for how many of the viral sequences are detected compared to how many viral sequences are in the dataset. So, if True Positives (TP) are correctly annotated viral sequences, and False Negatives (FN) are viral sequences that were not detected by a tool, then: Sensitivity= TP/(TP+FN)

Challenge: Sensitivity

Calculate the sensitivity for each tool. Since we are working with a viromics dataset, you can assume all sequences are viral.

Hint For VirFinder and DeepVirFinder, use a score of >0.5.

Solution

# PPR-Meta > number_of_detected_contigs/number_of_contigs # VirFinder > number_of_detected_contigs/number_of_contigs # DeepVirFinder > number_of_detected_contigs/number_of_contigs # VirSorter: number of sequences annotated as viral > sum(predVirSorter$pred==1) > sum(predVirSorter$pred==1)/number_of_contigs

If you have some extra time before our shared discussion, you can make some additional heatmaps for different ranges of contigs. You can also try and see how the correlation matrix changes when you include only longer/shorter contigs.

For the second part of this section, we will have a shared discussion about our research projects and how to choose the right tool for our purposes.

Key Points

The different tools often agree, but often disagree on whether a contig is viral. This is to an extent affected by the length of the contig.

Even for current state-of-the-art tools, getting a high sensitivity is hard.

Some tools make more similar predictions than others.

Prophage Prediction

Overview

Teaching: 20 min

Exercises: 20 minQuestions

How is prophage prediction similar/different from free virus prediction?

How do I find detailed information about my results in several output folders?

Objectives

Find out how tools detect prophages in a contig and how they indentify the phage-host boundraries.

Go through the VirSorter results and find out all the information you can get about a single prophage.

Identifying viral sequences from assembled metagenomes is not limited to entirely viral or entirely microbial sequences. Another important identification use case is detecting prophages. In fact, tools for detecting prophages were some of the first virus identification tools.

Discussion: Detecting prophages

Can you think of reasons why prophages might be easier to detect than free phages?

Look back at the evidence that VirSorter uses to determine whether a contig is viral or not. Which of these types of evidence are also suitable to identify prophages?

After you have though about why prophages might be easier to detect that free phages, read the section “Identification of virus-host boundraries” in this paper. The paper is about a tool called checkV that you will hear more about on Thursday. It estimates completeness and contamination of a viral genome, and it also predicts the boundraries between prophage and host genomes.

Challenge: Prophage annotation

As you have seen in the file VIRSorter_global-phage-signal.csv, VirSorter doesn’t just determine which contigs are viral, it also detects prophages. Go back to the file and look at the detected prophages (categories 4, 5, and 6). Can you find out whether they are complete or partial prophages? Or where the boundraries are?

Solution

Let’s look at category 4 (sure). We will focus on the first 6 columns of this section, for which the headers are:

“Contig_id, Nb genes contigs, Fragment, Nb genes,Category, Nb phage hallmark genes, Phage gene enrichment sig”

The corresponding values for the first contig are:

Contig_id: VIRSorter_NODE_50_length_17040_cov_25_975213

Nb genes contigs: 19

Fragment: VIRSorter_NODE_50_length_17040_cov_25_975213-gene_4-gene_16

Nb genes: 13

Category: 1

Nb phage hallmark genes: 2

Phage gene enrichment sig: gene_4-gene_16:2.21652167838327

So, we can see that genes 4-16 show phage gene enrichment and are predicted to be from a prophage. since we know that there are 19 genes that are classified “Nb” (Non-bacterial), we can assume that this phage is complete.

Next, try to find out, where the host-phage boundrary is in terms of nucleiotide position. This information is not available from VIRSorter_global-phage-signal.csv, so try to find it in the rest of the results. Are all the genes encoded on the same strand? How many nucleotides are between the genes?

Solution

This is a little bit complicated. Sometimes we have to dig to get the information that we want. As you have seen, for contig 50, the prophage is predicted to range from gene 4 to gene 16. In the folder “Metric_files”, there is a file which contains the coordinates of each gene on the contig. VirSorter recommends to use the coordinates for all the genes plus 50 nucleotides upstream of the most 3’ gene and 50 nucleotides downstream of the most 5’ gene. In the case of contig NODE_50, this means that the prophage coordinates would be 1014-15338.

Out of the 13 genes, in this region, there is only one gene on the “+”-strand, while twelve others are on the “-“-strand.

The intergenic spaces between the genes are very short, sometimes they even overlap. If you compare the length of the intergenic regions of the predicted prophage to the lenght of the intergenic regions outside of the predicted prophage, you will see that they are very similar. This is probably due to the fact that our dataset is a virome and so we expect almost all of our contigs to be viral and have short intergenic regions.

Now, let’s see if we can identify the taxonomy of the prophages that VirSorter predicted. Let’s try it with a simple BLAST search. In the folder Predicted_viral_sequences, you will find fasta files (.fasta) and genbank files (.gb) for each category of phage. The prophages are located in the files for categories 4-6. Run a Megablast (other flavours will overload the BLAST server for contigs of this length) for each category. Do you expect to find results?

Discussion

Do you think that a BLAST search is the best way to assign taxonomy to viruses? How many viruses do you think you can annotate this way?

Do you have an idea for other ways to assign taxonomy to viruses?

Today, you have learned a few methods for how bioinformatic tools distinguish between viral and non-viral sequences, even if the sequences are not similar to any known viruses. You have seen that homology-based methods are less sensitive than feature-based methods supported by machine learning. You have discussed the applications for more senstitive and less sensitive tools depending on the experiment that is conducted. And you have seen that even the best current tools will sometimes disagree on which sequences are viral and which aren’t.

We hope that the insights from today will help you understand the advantages and limitations of using different tools and will help you in choosing the right tool for your puropose in your own research.

Now, the last part of today is to listen to Lingyi’s lecture about the benchmarking of virus identification tools.

Key Points

Features such as GC-Content changes, or sudden enrichment in viral genes indicate the presence of a prophage in a contig/genome.

Results of a tool are sometimes distributed across multiple folders. Make sure to check all output files so that you can get the max out of your experiment.

Listen to Benchmarking lecture

Overview

Teaching: 60 min

Exercises: 0 minQuestions

Objectives

Listen to Lingyi’s lecture

Listen to Lingyi’s lecture about her benchmark of different virus identification tools.

Key Points

Introduction and setting up

Overview

Teaching: 15 min

Exercises: 0 minQuestions

Objectives

Function annotation

When studying microorganisms we are often interested in what they do, how they do that, and how this relates to what we already know. In this part of the course we will work our way from DNA sequences to proteins with functional annotations. To do so we will first predict genes using Prodigal, and then assign functions by comparing the sequences to the PHROG database.

Fist create a directory in your home directory called day3 (mkdir day3). We will work in this directory for the rest of the day.

Setting up

First we will create a new folder in the home directory, mkdir day3, in which we will work for the rest of this day.

We will look in detail at several bins and annotations today and to make the steps below comparable it is important that all of our bins and contigs have the same naming. While everyone should have the same data the naming might not be the same for everyone due to stochasticity in the tools (for example it might be bin1 for someone whereas the same data is in bin2 for someone else).

Data

I concatenated all the bin sequences (in all_binned_contigs.fasta) that we will download from Github (see below).

Make sure we are in ~/day3/ (cd day3) then run the code below

mkdir fasta_bins

cd fasta_bins

wget https://github.com/rickbeeloo/day3-data/raw/main/all_binned_contigs.fasta

wget https://raw.githubusercontent.com/rickbeeloo/day3-data/main/bin_01.fasta

wget https://raw.githubusercontent.com/rickbeeloo/day3-data/main/bin_62.fasta

cd ..

Conda

Like you did before we have to activate a conda environment

wget https://raw.githubusercontent.com/rickbeeloo/day3-data/main/day3_func.yaml

conda env create -f day3.yaml -n day3

conda activate day3

Rstudio

While a lot of packages are available on conda not all of them are. In this case we have to install two packages “manually”. Open rstudio (type rstudio on the command line) and then in the console (bottom of the screen) first type:

install.packages("bioseq")

then

install.packages("insect")

The installation might take a couple of minutes so in the meantime open a new terminal, activate the same environment (conda activate day3) and continue with the next exercise

Key Points

Gene prediction

Overview

Teaching: 30 min

Exercises: 15 minQuestions

Objectives

We will use Prodigal , a tool for “prokaryotic gene recognition and translation initiation site identification”, to identiy putative protein coding genes in our phage contigs. I purposely quoted the title as this hints that the tool is not specificallly built for phage gene predcition but for prokaryotes instead.

Why would Prodigal still perform well for phages?

For prodigal to be able to predict genes it has to be trained, for which it uses several sequence charcteristics, among others:

- Start codon usage (ATG, GTG, TTG)

- Ribosomal binding site (RBS) motif usage

- GC bias

- hexamer coding statistics

Prodigal algorithm

Have a look at the 2010 paper for a more detailed explanation of the algorithm.

- What does prodigal do when there is no so called “Shine Dalgarno” motif?

- Why is verifying the predictions difficult?

- What start codon(s) does prodigal use in its search?

Now we have a rough idea of how Prodigal works, go to the “metagenomes” section in the prodigal wiki

- What would be the best approach for our dataset?

Contigs length

The manual states: Isolated, short sequences (<100kbp) such as plasmids, phages, and viruses should generally be analyzed using Anonymous Mode. As a note, “Anonymous mode” was previously called “meta” mode. Are our phages shorter than 100kb? how long is the longest contig? Use

bioawkto find that out.Solution

bioawk -c fastx '{ print $name, length($seq) }' fasta_bins/all_binned_contigs.fasta

Running Prodigal

Lets run -p meta on all our contigs. Make sure we are in the day3 folder, then run the code below

mkdir prodigal_default

cd prodigal_default

prodigal -i ../fasta_bins/all_binned_contigs.fasta -a proteins.faa -o genes.txt -f sco -p meta

cd ..

- How many proteins did we predict? (use

grepfor example)- That we did not predict proteins does not mean they are not there. In what case do you think prodigal will miss proteins?

Key Points

Prodigal modes

Overview

Teaching: 30 min

Exercises: 30 minQuestions

The manual does state that normal mode would be preferable, as it will train the model for the sequence in question, however this would generally require >100kb sequence to obtain sufficient training data. As we can see from the previous step we do not have one that satisfies this threshold but bin_01||F1M_NODE_1_length_97959_cov_90.685580 with 97959nt comes close! So just to get an idea lets compare -p meta and “normal mode” for this bin.

Again make sure we are in ~/day3/, then run the code below

mkdir prodigal_comparison

cd prodigal_comparison

prodigal -i ../fasta_bins/bin_01.fasta -a proteins_default.faa -o genes_default.txt -f sco

prodigal -i ../fasta_bins/bin_01.fasta -a proteins_meta.faa -o genes_meta.txt -f sco -p meta

cd ..

Gene start

Load the genes_default.txt and genes_meta.txt in R (type rstudio) or Excel and see if you can spot differences between the two methods. I also wrote a script that will visualize the gene predictions on the contig. In Rstudio go to file > New file > Rscript and then you can copy the code from here and paste it - take a look at the script. It will read the gene coordinates from the two files (you have to adjust the paths), assign them to seperate groups (or the same group when in agreement) and then visualize these on the genome with gggenes.

Comparison

- Look at the gene comparison plot, what conclusions can we draw?*

- Look at the “combined” dataframe (

View(combined)), where do the predictions differ? start, middle or end of genes?Yesterday some people got weird symbols in their plot, if that is the case I also put the figure here.

I randomly chose one protein from the “combined” dataframe:

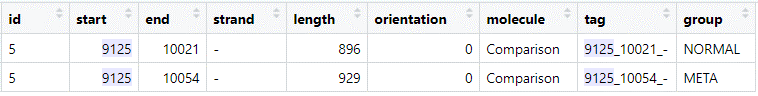

Both predictions have the same end position (note that this is on the reverse (-) strand), but differ for the start codon position.

Start codon position

- Think about what additional information we can use to figure out what the most likely start codon is.

We know that in order to replicate the virus has to hijack the hosts translation machinery and use that to syntheize proteins from its mRNAs. For the mRNA to be “detected” by the ribosome it has to bind the Ribosome Binding Site (RBS) - a sequence motif upstream of the start codon. This motif is not universally the same across the bacterial kingdom. For example, Escherichia coli utilizes the Shine-Dalgarno sequence (SD), whereas Prevotella bryantii relies on binding of ribosomal protein S1 to the unstructured 5’-UTR. Take some time to look at Figure 1 (and/or S5) of this paper to see what these motifs look like.

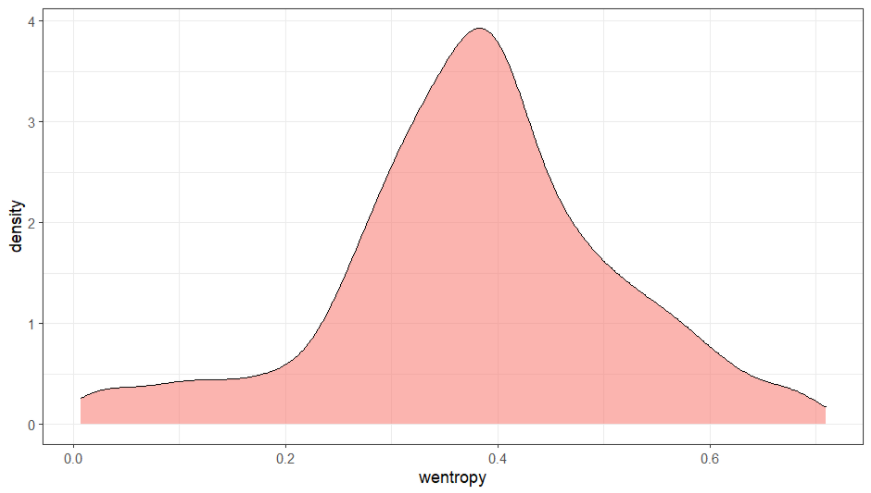

Now lets generate these motifs plots for both our meta and normal prodigal prediction. To do this we first have to extract all sequences upstream of the predicted start codons and then generate a sequence logo, for example using ggseqlogo. We already have the gene coordinate files, but we also need the contig sequence (bin_01.fasta) that we will read with bioseq. See this script on how to extract the upstream sequences and generate the sequence logos. It uses the data from the previous step (namely the default_genes and meta.genes dataframe) so you can paste it under the code from before.

Also again, think of changing the file path for bin_01.fasta when running the code.

Start codon position

- Compare the sequence logos to that in the paper above, could this tell you something about the host range of this phage?

Lets take a look at the upstream sequences of the “9125” gene:

META: `ACAAAAGTATGAACGTGGAGCACATAATA ATG` NORM: `GTAATAAAACTAGATATAAAAACTAATATT ATG`

Why do you think each mode chose this specific start codon? (remember what characetersitcs were used and the difference between

metaand normal - see the wiki)Based on the logos, which one would you pick?

In case you have weird symbols again, find the plot here.

Key Points

Functional annotation

Overview

Teaching: 30 min

Exercises: 30 minQuestions

We now know where our protein encoding sequences are located on the genome and what their amino acid sequence is, however, we do not know what their function in the phage is. To figure this out we will compare the by meta predicted protein sequences to the PHROG database using HHsuite.

First we have to download the HHsuite index from the PHROG website. The models in the index file only have an ID, like phrog_13, which is not informative by itself. Hence we also download the phrog_annot_v3.tsv to link the ID to annotations (e.g. terminase protein).

Downloading the phrog HH-suite and corresponding annotation table

Again make sure we are in ~/day3/, then run the code below

mkdir phrog

cd phrog

wget https://phrogs.lmge.uca.fr/downloads_from_website/phrog_annot_v3.tsv

wget https://phrogs.lmge.uca.fr/downloads_from_website/phrogs_hhsuite_db.tar.gz

tar -xvzf phrogs_hhsuite_db.tar.gz

cd ..

Annotated proteins

- How many phrog annotation did we download (see the tsv)?

Annotating the predicted proteins

Unfortunately we cannot just pass our default_proteins.faa to hhsuite (unlike hmmer search. We first have to create a FASTA file for each invidiual protein sequence to do this we will use seqkit.

cd prodigal_default

mkdir single_fastas

seqkit split -i -O single_fastas/ proteins.faa

Running hhblits on phrog

Now we can finally annotate the protein sequences! We have to be in the prodigal_default directory for this and the next step.

mkdir hhsearch_results

for file in single_fastas/*.faa; do base=`basename $file .faa`; echo "hhblits -i $file -blasttab hhsearch_results/${base}.txt -z 1 -Z 1 -b 1 -B 1 -v 1 -d ../phrog/phrogs_hhsuite_db/phrogs -cpu 1" ; done > all_hhblits_cmds.txt

parallel --joblog hhblits.log -j8 :::: all_hhblits_cmds.txt

HHblits

- For our search we use

hhblits, but we could have also usedhhsearch, what is the difference? (see the wiki)- You probably noticed the message “WARNING: Ignoring invalid symbol ‘*’, why is this happening?

Parsing the results

Now it is time to parse the results :) For each query (predicted protein) we grab the best match (from hhsearch_results), link the ID to the annotation (in phrog_annot_v3.tsv) and find the genomic location in our Prodigal output file (genes.txt). Get the Python script to do this from GitHub:

wget https://raw.githubusercontent.com/rickbeeloo/day3-data/main/parse_hmm_single.py

python3 parse_hmm_single.py hhsearch_results/ hhsearch_results.txt ../phrog/phrog_annot_v3.tsv genes.txt

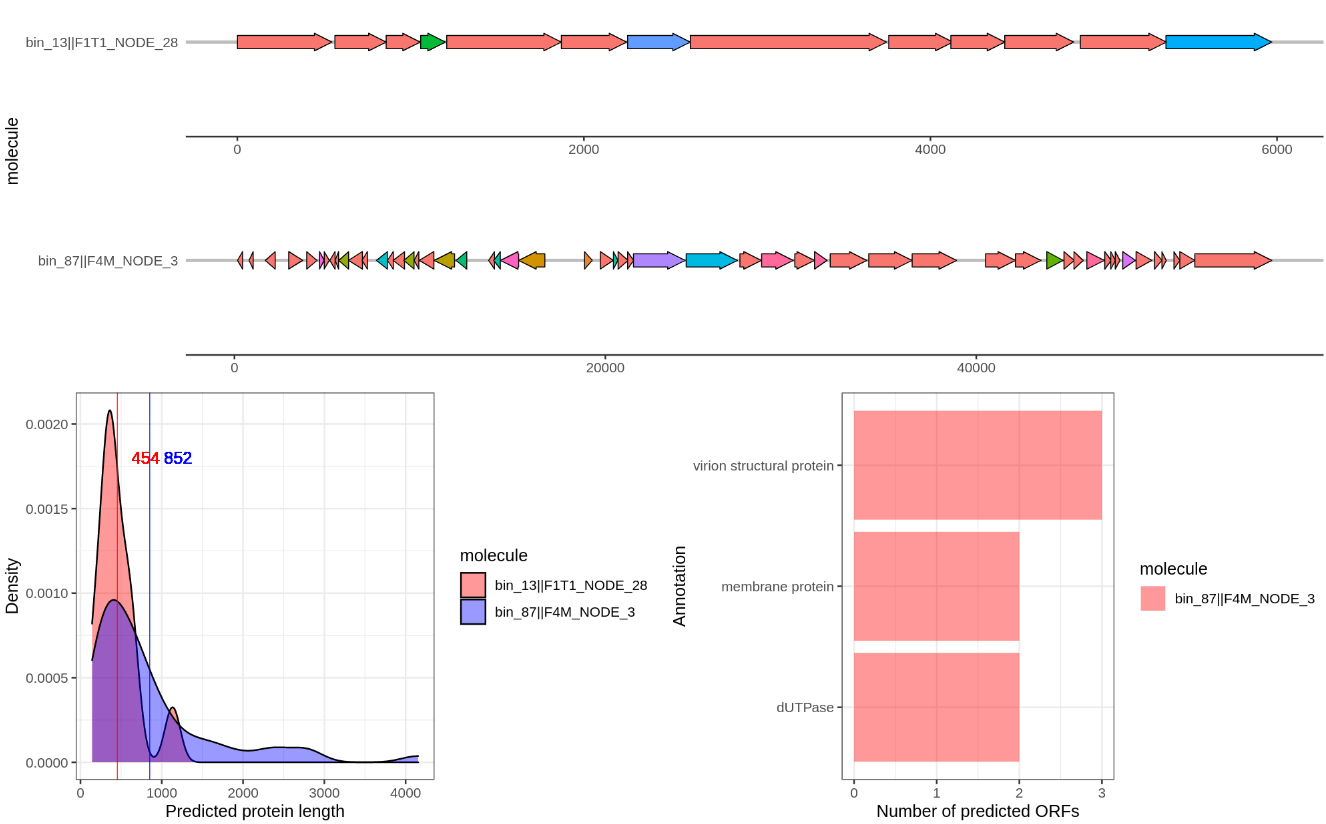

I also wrote a script that will visualize the gene annotation of two provided contigs using gggenes. You can find the script here.. Like before create a new Rscript and copy paste the code. The line df <- read.table(file.choose().... (line: x) will open a file dialog where you can choose the hhsearch_results.txt. Then run the while loop that will ask you to provide two contig identifiers. Just a unique part is sufficient, such as bin_01||F1M_NODE_1. I added the option to save to an output file in case of weird symbols again… For example it will produce a figure like this:

Compare several of the following contigs:

bin_87||F4M_NODE_3

bin_01||F1M_NODE_1

bin_62||F3M_NODE_2

bin_13||F1T1_NODE_28

HHblits

Take a closer look at the contigs

bin_01||F1M_NODE_1vsbin_62||F3M_NODE_2- both crassphages.

What do you notice in this comparison?

Go to Figure 7 of this paper, is this what you thought? How are we going to tackle this?

Annotating two contigs with a different translation table

In the paper a modified version of Prodigal is used. Instead of modifying Prodigal we will use translation table 15 where TAG is considered a coding codon instead of a STOP(*).

- How do we tell Prodigal to use this translation table? wiki

(NOTE: using a different translation table is not supported in meta mode, so we have to use the normal gene prediction here)

We have to go back to the ~/day3/ directory and then run the following

mkdir prodigal_dif_table

cd prodigal_dif_table

touch two_contigs.fasta

cat ../fasta_bins/bin_01.fasta > two_contigs.fasta

cat ../fasta_bins/bin_62.fasta >> two_contigs.fasta

prodigal -i two_contigs.fasta -a two_contig_proteins.faa -o two_contig_genes.txt -f sco -g 15

mkdir single_fastas

seqkit split -i -O single_fastas/ two_contig_proteins.faa

mkdir hhsearch_results

for file in single_fastas/*.faa; do base=`basename $file .faa`; echo "hhblits -i $file -blasttab hhsearch_results/${base}.txt -z 1 -Z 1 -b 1 -B 1 -v 1 -d ../phrog/phrogs_hhsuite_db/phrogs -cpu 1"; done > all_hhblits_cmds.txt

parallel --joblog hhblits.log -j8 :::: all_hhblits_cmds.txt

wget https://raw.githubusercontent.com/rickbeeloo/day3-data/main/parse_hmm_single.py

python3 parse_hmm_single.py hhsearch_results/ two_contig_hhsearch_results.txt ../phrog/phrog_annot_v3.tsv two_contig_genes.txt

Use the script from the previous step (this time loading the two_contig_hhsearch_results) and again visualize the contigs bin_01||F1M_NODE_1 vs bin_62||F3M_NODE_2.

Compare predictions

- How did the prediction change? and what about

bin_01||F1M_NODE_1?

Key Points

Lunch break

Overview

Teaching: 50 min

Exercises: 0 minQuestions

Objectives

Key Points

Clustering proteins

Overview

Teaching: 30 min

Exercises: 30 minQuestions

We now queried our protein sequences directly against the models from the PHROG database. To increase sensitivity of our search we could first build models, more specifically Hidden Markov Models (HMMs), ourselves and query these against the database. Take a look at the EBI description here to get an idea of what this will look like. To build these we will go through the following steps:

- Clustering similar protein sequences together (MMSEQ2)